Before proceeding to the measurement of information, let's introduce a definition and see what we are dealing with.

Definition

Information is facts (messages, data) in all their manifestations and forms, regardless of their content. Even complete nonsense written on a piece of paper can be considered information. This definition, however, comes from Russian federal law.

International standards give the following meanings:

- knowledge about objects, facts, ideas, values, opinions that people exchange in a specific context;

- knowledge of facts, events, meanings, things, concepts, which in a particular context have a definite meaning.

Data is a materialized form of presenting information, although in some texts these two concepts can be used as synonyms.

Measurement Methods

The concept of information is defined differently. It is also measured in different ways. The following main approaches to the measurement of information can be distinguished:

- Alphabetical approach.

- Probabilistic approach.

- A meaningful approach to the measurement of information.

They all correspond to different definitions and have different authors, whose views on data differed. The alphabetical approach, created by A.N. Kolmogorov, does not take the subject of the information transfer into account; that is, it measures the quantity of information regardless of how important it is to the party transmitting or receiving it. The meaningful approach to measuring information, created by C. Shannon, takes more variables into account and is a kind of assessment of how important the data is to the receiving party. But let's look at everything in order.

Probabilistic approach

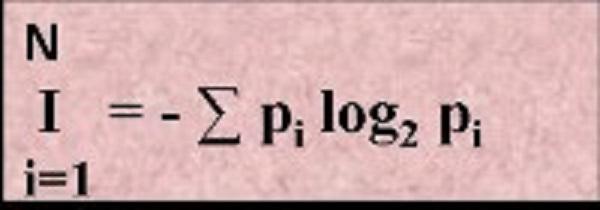

As already mentioned, approaches to measuring the amount of information vary greatly. This approach was developed by Shannon in 1948. Its idea is that the amount of information depends on the number of events and their probabilities. The amount of information can be calculated using the following formula, in which I is the quantity sought, N is the number of events, and p_i is the probability of the i-th event:

I = -(p_1 * log2(p_1) + p_2 * log2(p_2) + ... + p_N * log2(p_N))
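To make the probabilistic approach concrete, here is a minimal Python sketch of Shannon's formula, assuming the probabilities are given as a list that sums to 1. The function name `shannon_information` and the sample distributions are illustrative choices, not part of the original article.

```python
import math

def shannon_information(probabilities):
    """Average information per event, in bits: I = -sum(p_i * log2(p_i))."""
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Events with zero probability contribute nothing to the sum.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

# Hypothetical distributions chosen for illustration.
fair_coin = shannon_information([0.5, 0.5])      # two equally likely events
four_equal = shannon_information([0.25] * 4)     # four equally likely events
biased_coin = shannon_information([0.9, 0.1])    # one event dominates

print(fair_coin, four_equal, biased_coin)
```

With equally likely events the result matches log2 of the number of events, while the biased coin yields less than 1 bit because its outcome is easier to predict.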

Alphabetical approach

A completely self-sufficient method of calculating the amount of information. It does not take into account what is written in the message and does not tie the amount of text to its content. To calculate the amount of information, we need to know the size of the alphabet (the number of characters in it) and the length of the text. In principle, the size of an alphabet is unlimited. Computers, however, use an alphabet of 256 characters, which is sufficient. This lets us calculate how much information one character of text typed on a computer carries. Since 256 = 2^8, one character is 8 bits of data.

1 bit is the minimum, indivisible amount of information. According to Shannon, it is the quantity of data that halves the uncertainty of knowledge.

8 bits = 1 byte.

1024 bytes = 1 kilobyte.

1024 kilobytes = 1 megabyte.
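The alphabetical calculation can be sketched in Python as follows. The function names and the 2048-character message are hypothetical. Rounding the bits per symbol up to a whole number for alphabets whose size is not a power of two is an assumption of this sketch, not something the text states.

```python
def bits_per_symbol(alphabet_size):
    """Smallest whole number of bits i such that 2**i >= alphabet_size."""
    if alphabet_size < 1:
        raise ValueError("alphabet must contain at least one symbol")
    # (n - 1).bit_length() is an exact integer form of ceil(log2(n)).
    return (alphabet_size - 1).bit_length()

def message_size_bits(symbol_count, alphabet_size):
    """Amount of information in a text: symbol count times bits per symbol."""
    return symbol_count * bits_per_symbol(alphabet_size)

# Hypothetical message: 2048 characters typed with the 256-character alphabet.
size_bits = message_size_bits(2048, 256)
size_bytes = size_bits // 8          # 8 bits = 1 byte
size_kilobytes = size_bytes / 1024   # 1024 bytes = 1 kilobyte

print(size_bits, size_bytes, size_kilobytes)
```

Note that the content of the message never enters the calculation; only its length and the alphabet size do.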

Meaningful approach

As you can see, approaches to measuring information differ greatly. There is another way to measure its quantity, one that lets you assess not only the quantity but also the quality. The meaningful approach to measuring information takes the usefulness of the data into account. Under this approach, the amount of information contained in a message is determined by the amount of new knowledge that a person receives.

Expressed mathematically, an amount of information equal to 1 bit should halve the uncertainty of a person's knowledge. Thus, we use the following formula to determine the amount of information:

X = log2(H), where X is the amount of data received and H is the number of equally probable outcomes. As an example, let's solve a problem.

Suppose we have a triangular pyramid with four faces. When you toss it, it will land on one of the four faces. Thus, H = 4 (the number of equiprobable outcomes). The chance that the pyramid comes to rest balanced on an edge is even smaller than the chance that a tossed coin lands on its edge, so we can ignore it.

Solution. X = log2(H) = log2(4) = 2.

As you can see, the result is 2. But what does this figure mean? As already mentioned, the minimum indivisible unit of measurement is the bit. So once the pyramid has landed, we have received 2 bits of information.
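The same calculation can be checked with a short Python sketch. The function name `information_bits` is an illustrative choice, and the coin and die are extra examples that do not come from the article.

```python
import math

def information_bits(equally_likely_outcomes):
    """Amount of information X = log2(H) for H equally probable outcomes."""
    if equally_likely_outcomes < 1:
        raise ValueError("there must be at least one outcome")
    return math.log2(equally_likely_outcomes)

pyramid = information_bits(4)   # the four-faced pyramid from the example
coin = information_bits(2)      # a fair coin, added for comparison
die = information_bits(6)       # a six-sided die: not a whole number of bits

print(pyramid, coin, die)
```

When H is not a power of two, as with the die, the result is fractional, which is where a calculator or a table of logarithms becomes useful.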

Approaches to measuring information use logarithms for calculations. To simplify these actions, you can use a calculator or a special table of logarithms.

Practice

Where can you benefit from the knowledge gained in this article, especially about the meaningful approach to the measurement of information? Without a doubt, in a computer science exam. The topic also helps you find your way around computer technology, in particular the sizes of internal and external memory. Beyond that, this knowledge has little practical value outside of science. No employer will make you calculate the amount of information in a printed document or a written program. The one exception is programming, where you need to set the size of the memory allocated for a variable.